
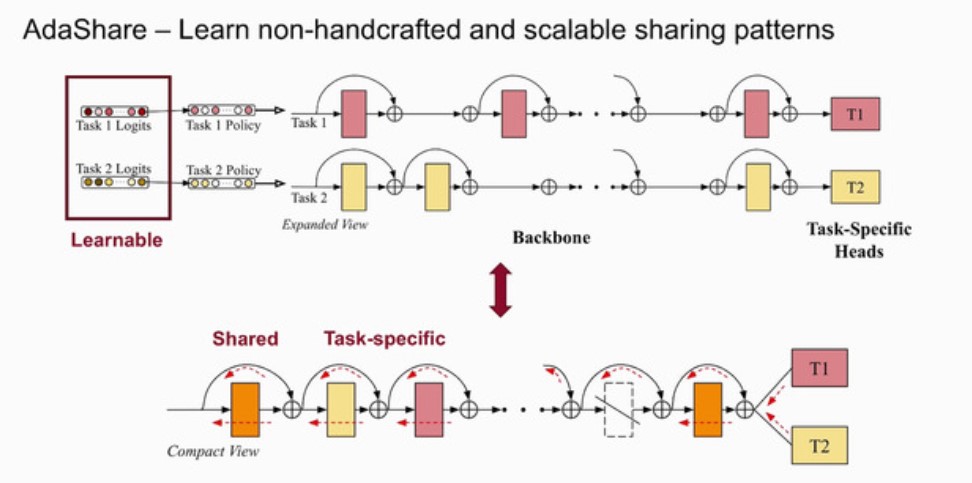

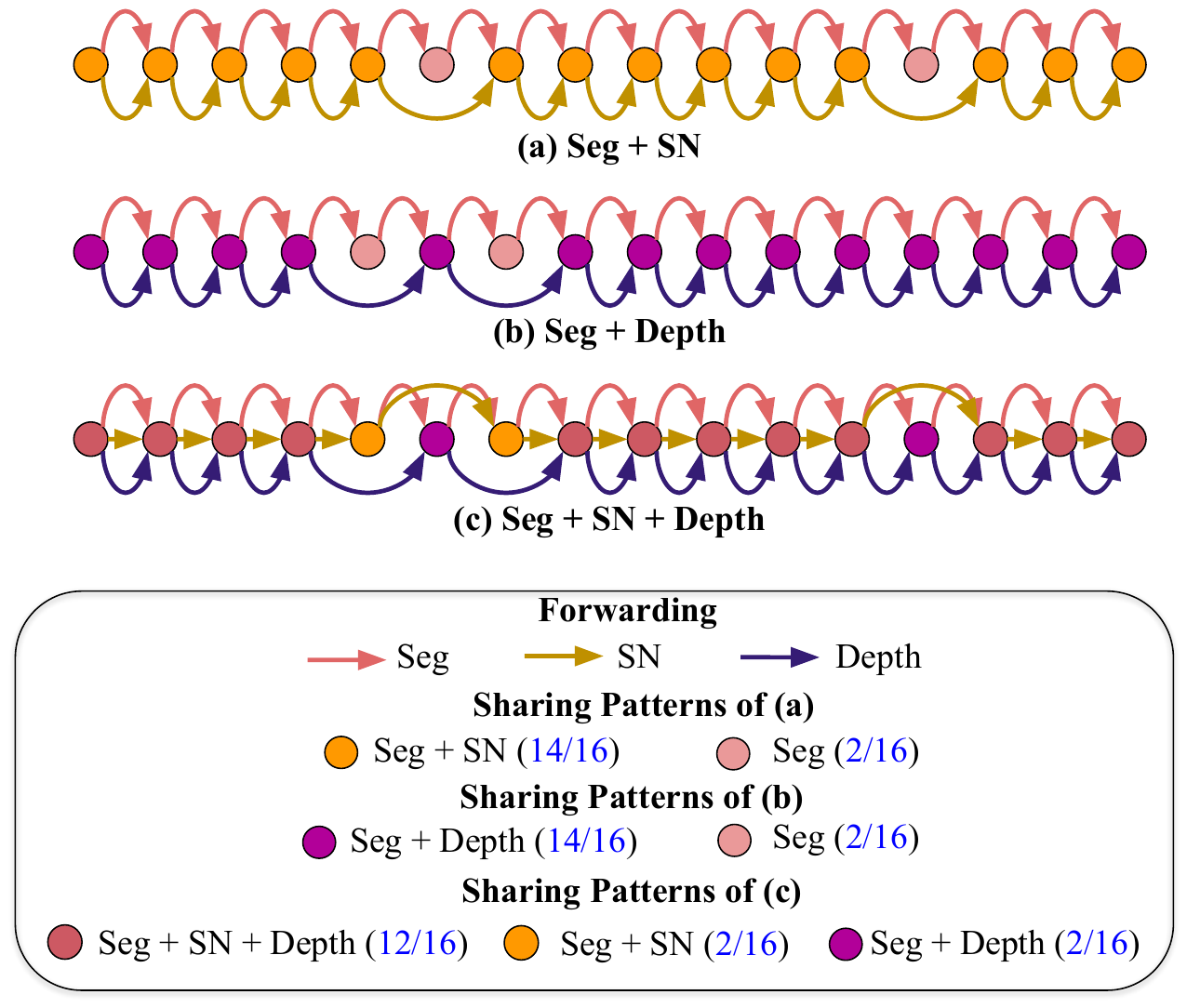

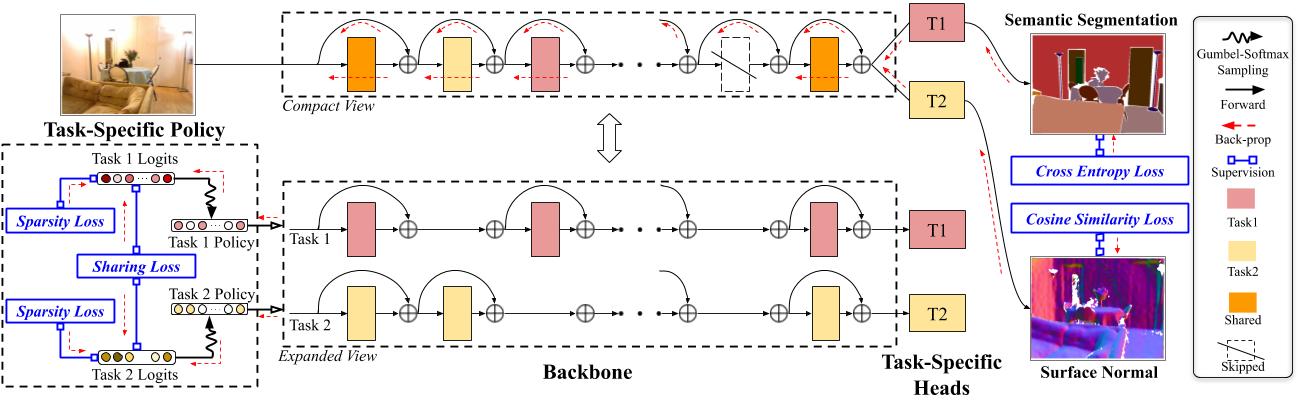

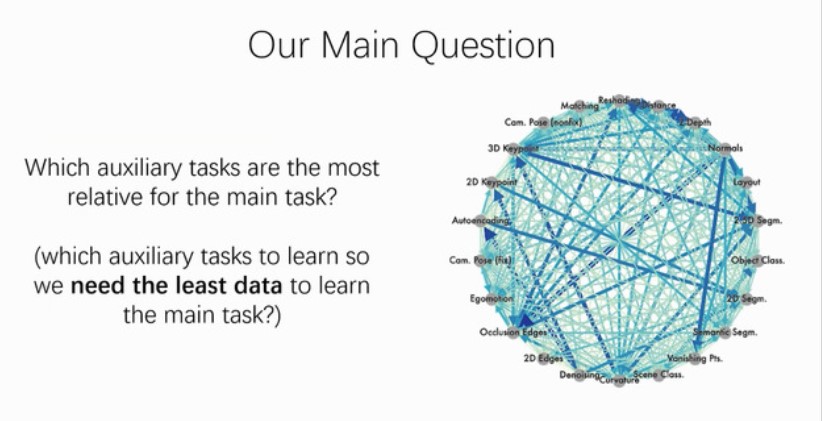

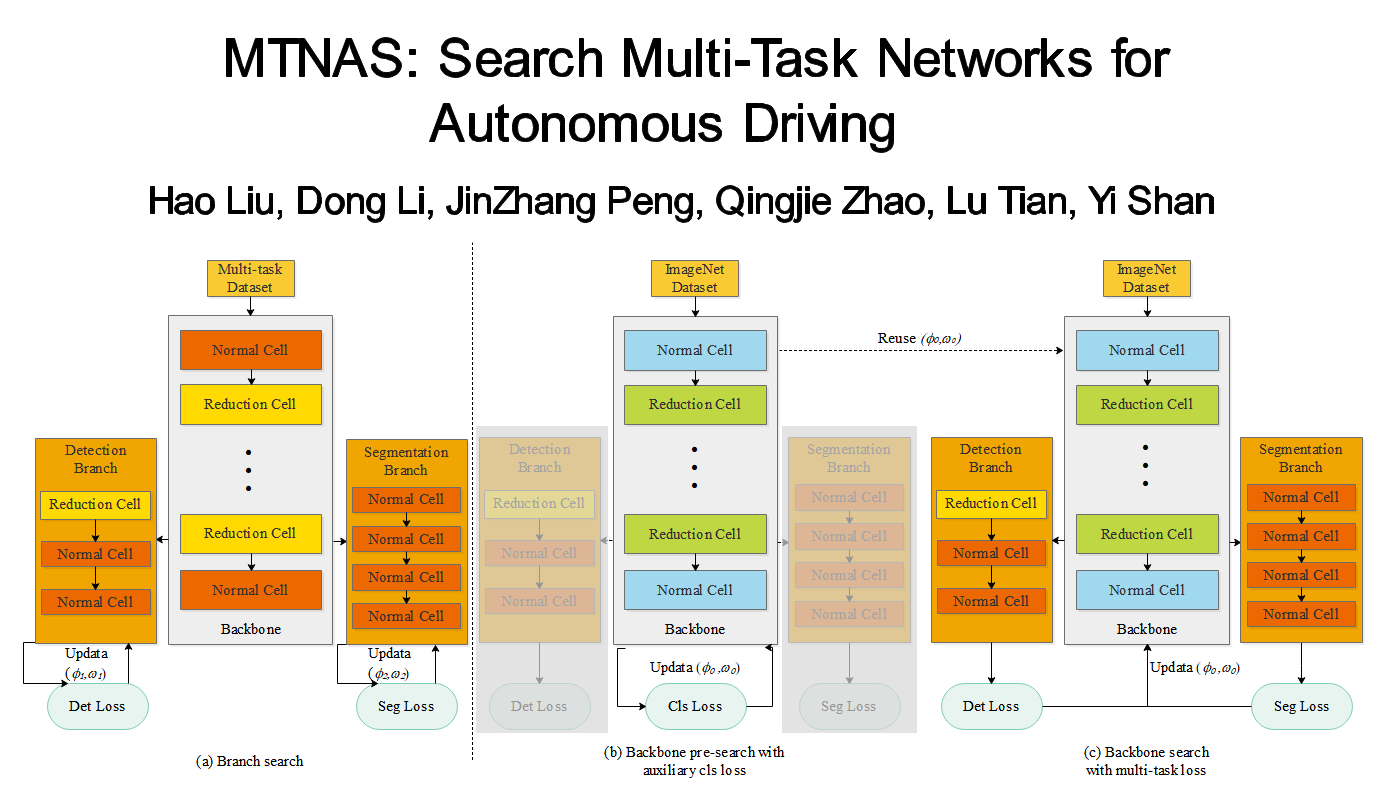

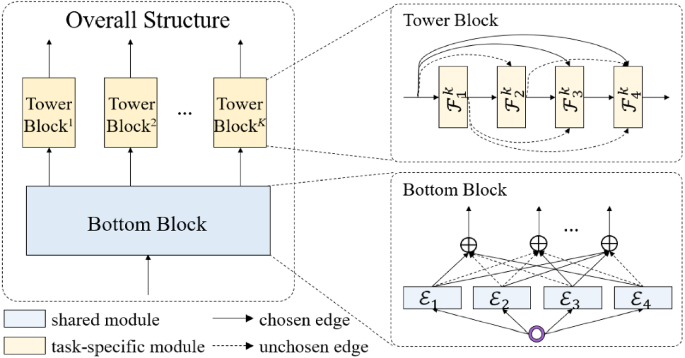

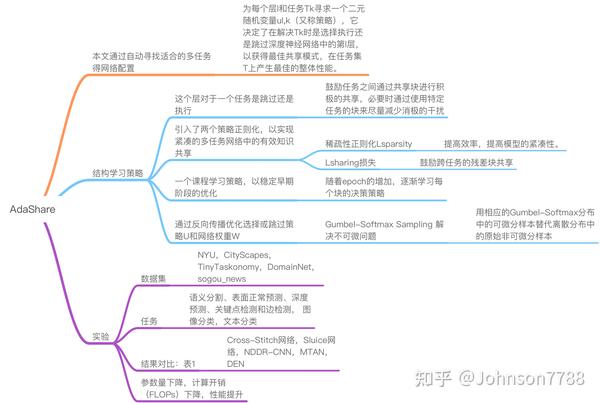

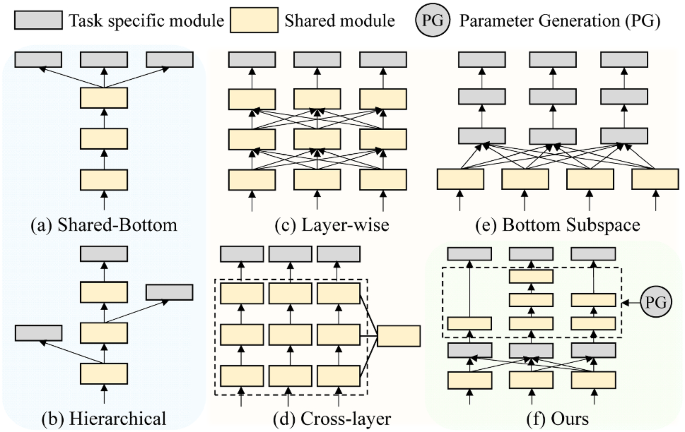

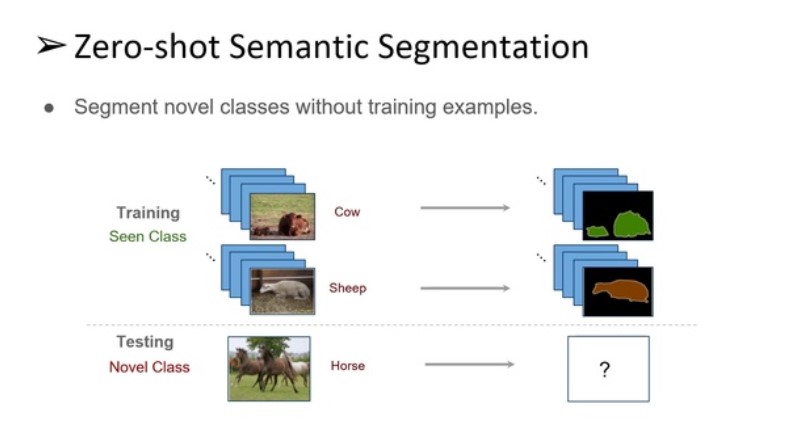

Figure 6: Change in pixel accuracy for semantic segmentation classes of AdaShare over MTAN (blue bars). Classes are ordered by the number of pixel labels (black line). Compared to MTAN, we improve the performance of most classes, including those with less labeled data.

"AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning": The typical way of conducting multi-task learning with deep neural networks is either through hand-crafted schemes that share all initial layers and branch out at an ad-hoc point, or through separate task-specific networks with an additional feature sharing/fusion mechanism. Unlike existing methods, we propose an adaptive sharing approach, called *AdaShare*, that decides what …
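Figure 6 reports the change in pixel accuracy of AdaShare relative to MTAN for each semantic segmentation class. Classes are ordered by how many labeled pixels they have. The sketch below shows one way to compute such a per-class comparison from two models' predictions. It is a minimal illustration, not the authors' evaluation code, and it rests on several assumptions:

- The function names are hypothetical.
- Predictions and labels are flat lists of class ids over labeled pixels.
- The largest-first sort order is an assumption.

```python
from typing import List, Optional, Sequence, Tuple


def per_class_pixel_accuracy(
    pred: Sequence[int], target: Sequence[int], num_classes: int
) -> Tuple[List[Optional[float]], List[int]]:
    """Hypothetical helper: per-class pixel accuracy and labeled-pixel counts.

    pred and target are flat sequences of class ids, one entry per labeled pixel.
    Accuracy of class k = correctly predicted pixels of class k / labeled pixels of class k.
    """
    correct = [0] * num_classes
    total = [0] * num_classes
    for p, t in zip(pred, target):
        total[t] += 1
        if p == t:
            correct[t] += 1
    # Classes with no labeled pixels get None rather than a division by zero.
    acc = [c / n if n else None for c, n in zip(correct, total)]
    return acc, total


def accuracy_change(
    pred_a: Sequence[int], pred_b: Sequence[int], target: Sequence[int], num_classes: int
) -> List[Tuple[int, int, float]]:
    """Hypothetical helper: (class id, pixel count, accuracy of A minus accuracy of B).

    Classes are sorted by labeled-pixel count, largest first (an assumed order);
    classes without labeled pixels are dropped.
    """
    acc_a, counts = per_class_pixel_accuracy(pred_a, target, num_classes)
    acc_b, _ = per_class_pixel_accuracy(pred_b, target, num_classes)
    order = sorted(range(num_classes), key=lambda k: counts[k], reverse=True)
    return [(k, counts[k], acc_a[k] - acc_b[k]) for k in order if counts[k] > 0]
```

In the Figure 6 setting, `pred_a` would hold AdaShare's predictions and `pred_b` MTAN's. Positive values would then mark classes where AdaShare does better. For example, take `target = [0, 0, 0, 0, 1, 1, 2]`, `pred_a = [0, 0, 0, 1, 1, 1, 2]` and `pred_b = [0, 0, 0, 0, 0, 1, 0]`. With these inputs, `accuracy_change(pred_a, pred_b, target, 3)` returns `[(0, 4, -0.25), (1, 2, 0.5), (2, 1, 1.0)]`.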

AdaShare: Learning What to Share for Efficient Deep Multi-Task Learning

- AdaShare: Learning what to share for efficient deep multi-task learning. In Advances in Neural Information Processing Systems.
- Shikun Liu, Edward Johns, and Andrew J. Davison. End-to-end multi-task learning with attention. In IEEE Conference on Computer Vision and Pattern Recognition, 19.


AdaShare: Learning what to share for efficient deep multi-task learning. arXiv preprint (19).

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning

Ximeng Sun¹, Rameswar Panda², Rogerio Feris, Kate Saenko

¹Boston University, ²MIT-IBM Watson AI Lab, IBM Research

{sunxm, saenko}@bu.edu, {rpanda@, rsferis@us.}ibm.com

Abstract: Multi-task learning is an open and challenging problem in computer vision.

Implementation of SparseChem on an AdaShare architecture: kbardool/AdaSparseChem on GitHub.
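The abstract contrasts two kinds of sharing. Hand-crafted schemes share all initial layers and branch at an ad-hoc point. AdaShare instead decides adaptively what to share. In the AdaShare paper, this takes the form of a task-specific policy that selects which layers of a shared network each task executes. The policy is learned jointly with the network weights.

The sketch below only illustrates the forward pass under a fixed, hand-written policy. It assumes:

- Residual blocks, so a skipped block acts as the identity.
- Scalars standing in for feature maps.
- `run_task`, `shared_blocks`, and the toy blocks and policies are hypothetical names for illustration, not the authors' code.

```python
from typing import Callable, Dict, List

# A "block" maps a feature (a scalar here, standing in for a feature map) to a residual update.
Block = Callable[[float], float]


def run_task(x: float, blocks: List[Block], policy: List[bool]) -> float:
    """Hypothetical forward pass of the shared backbone for one task.

    policy[i] is True if this task executes block i and False if it skips it.
    Blocks are assumed residual, so a skipped block leaves x unchanged.
    """
    for block, execute in zip(blocks, policy):
        if execute:
            x = x + block(x)  # residual update: x_{i+1} = x_i + f_i(x_i)
    return x


def shared_blocks(policies: Dict[str, List[bool]]) -> List[int]:
    """Indices of blocks that every task executes, i.e. blocks shared by all tasks."""
    num_blocks = len(next(iter(policies.values())))
    return [i for i in range(num_blocks) if all(p[i] for p in policies.values())]


if __name__ == "__main__":
    # Hypothetical toy backbone: three blocks that each add a constant.
    blocks: List[Block] = [lambda x: 1.0, lambda x: 10.0, lambda x: 100.0]
    # Hypothetical fixed policies; in AdaShare these would be learned per task.
    policies = {"segmentation": [True, False, True], "depth": [True, True, False]}
    for task, policy in policies.items():
        print(task, run_task(0.0, blocks, policy))
    print("shared by all tasks:", shared_blocks(policies))
```

Setting every task's policy to all `True` gives a backbone fully shared by all tasks. Differing policies let each task reuse some blocks and skip others, which is the kind of per-task decision the abstract describes.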


Friday, December 13th, 19 · Friday, January 10th · kawanokana
